30 Features that Dramatically Improve LLM Performance – Part 3

This is the third and final article in this series, featuring some of the most powerful features to improve RAG/LLM performance. In particular: speed, latency, relevancy (hallucinations, lack of exhaustivity), memory use and bandwidth (cloud, GPU, training, number of parameters), security, explainability, as well as incremental value for the user. I published the first part here, and the second part here. I implemented these features in my xLLM system. See details in my recent book, here.

22. Self-tuning and customization

If your LLM, or part of it such as a sub-LLM, is light enough that your backend tables and token lists fit in memory while occupying little space, then it opens up many possibilities. For instance, the ability to fine-tune in real time, either via automated algorithms, or by letting the end-user do it on their own. The latter is available in xLLM, with intuitive parameters: when fine-tuning, you can easily predict the effect of lowering or increasing some values. In the end, two users with the same prompt may get different results if working with different parameter sets. It leads to a high level of customization.

Now if you have a large number of users, with a few hundred allowed to fine-tune the parameters, you can collect valuable information. It becomes easy to detect the popular combinations of values from the customized parameter sets. The system can auto-detect the best parameter values and offer a small selection as default or recommended combinations. More can be added over time based on user selection, leading to organic reinforcement learning and self-tuning.
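As a minimal sketch of how popular combinations could be detected, the snippet below counts identical parameter sets across users and returns the most frequent ones. The function name and the parameter names (`min_pmi`, `max_words`) are hypothetical, chosen for illustration only; this is not the xLLM implementation.

```python
from collections import Counter

def popular_parameter_sets(user_params, top_n=3):
    """Return the top_n most frequently used parameter sets.

    user_params: list of dicts, one per user (hypothetical format).
    Parameter values are assumed to be hashable (numbers, strings, tuples).
    """
    # Sort items by key so that key order does not create distinct sets
    counter = Counter(tuple(sorted(p.items())) for p in user_params)
    return [dict(combo) for combo, _ in counter.most_common(top_n)]

users = [
    {"min_pmi": 0.2, "max_words": 3},
    {"max_words": 3, "min_pmi": 0.2},
    {"min_pmi": 0.0, "max_words": 4},
]
defaults = popular_parameter_sets(users, top_n=1)
```

The returned sets could then be offered as default or recommended combinations.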

Self-tuning is not limited to parameter values. Some metrics such as PMI (an alternative to the dot product and cosine similarity) depend on some parameters that can be fine-tuned. But even the whole formula itself (a Python function) can be customized.
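To illustrate what a customizable metric might look like, here is a sketch of a PMI-like function with one tunable parameter. The exact formula, the `exponent` parameter, and the argument names are assumptions made for this example, not the formula used in xLLM.

```python
def pmi(count_ab, count_a, count_b, exponent=0.5):
    """Hypothetical PMI-like association score between tokens A and B.

    count_ab: number of times A and B are found together
    count_a, count_b: individual token counts
    exponent: tunable parameter (assumption); with 0.5, the score stays
              in [0, 1] whenever count_ab <= min(count_a, count_b)
    """
    if count_a <= 0 or count_b <= 0:
        return 0.0
    return count_ab / (count_a * count_b) ** exponent

# The whole function can be swapped for a user-defined one
def rank_pairs(pairs, score_fn=pmi):
    """Sort (count_ab, count_a, count_b) triples by decreasing score."""
    return sorted(pairs, key=lambda p: score_fn(*p), reverse=True)
```

Passing a different `score_fn` to `rank_pairs` is one simple way to let a user replace the formula itself rather than only its parameters.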

23. Local, global parameters, and debugging

In multi-LLM systems (sometimes called mixture of experts), whether you have a dozen or hundreds of sub-LLMs, you can optimize each sub-LLM locally, or the whole system. Each sub-LLM has its own backend tables to deal with the specialized corpus that it covers, typically a top category. However, you can have either local or global parameters:

- Global parameters are identical for all sub-LLMs. They may not perform as well as local parameters, but they are easier to maintain. Also, they can be more difficult to fine-tune. However, you can fine-tune them first on select sub-LLMs, before choosing the parameter set that, on average, performs best across multiple high-usage sub-LLMs.

- Local parameters boost performance but require more time to fine-tune, as each sub-LLM has a different set. At the very least, you should consider using ad-hoc stopword lists for each sub-LLM. These are built by looking at top tokens prior to filtering or distillation, and letting an expert determine which tokens are worth ignoring, for the topic covered by the sub-LLM in question.

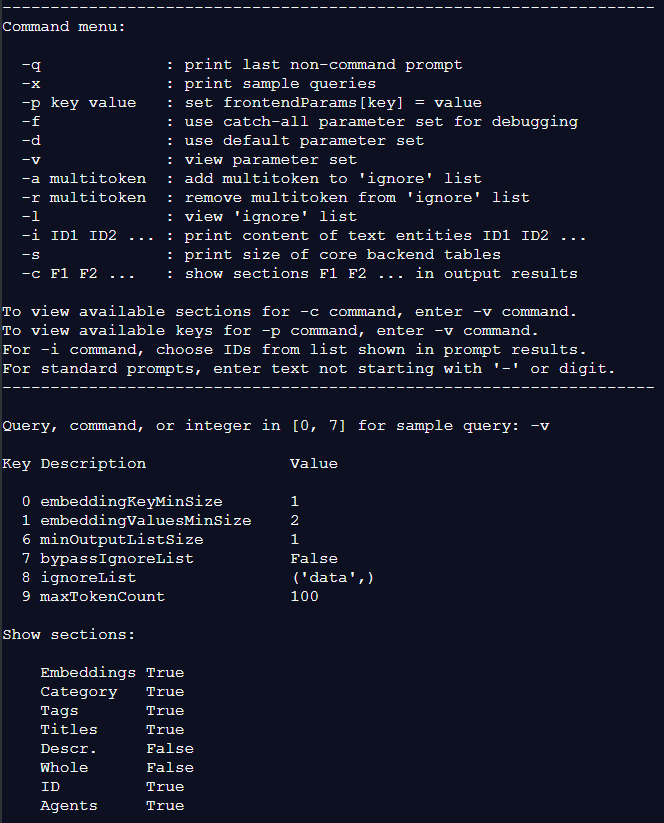

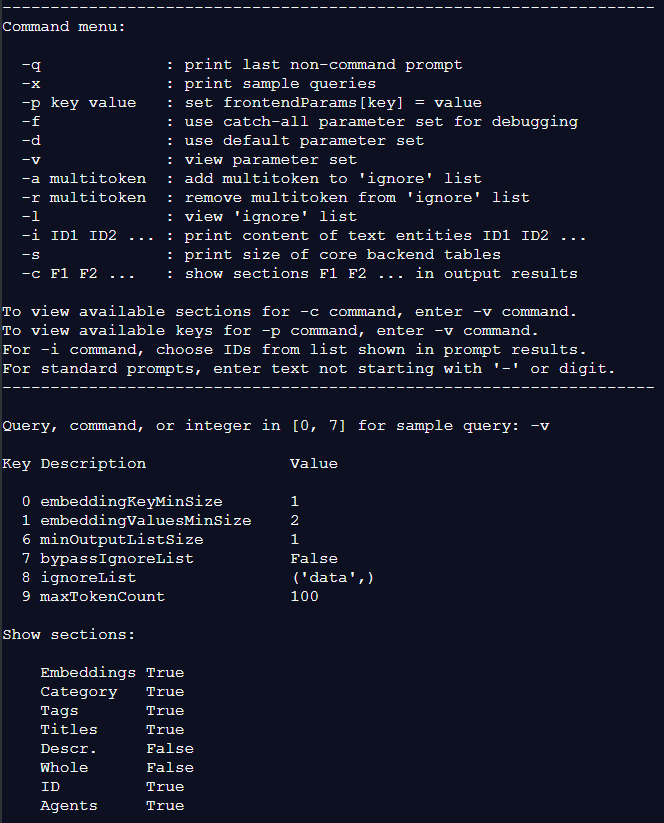

You can have a mix of global and local parameters. In xLLM, there is a catch-all parameter set that returns the maximum output you can possibly get from any prompt. It is the same for all sub-LLMs. See option -f on the command prompt menu in Figure 1. You can use this parameter set as a starting point and modify values until the output is concise enough and shows the most relevant items at the top. The -f option can also be used for debugging.
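One simple way to combine global and local parameters is to start from the global set and let each sub-LLM override only the values it needs. The sketch below assumes parameters are stored in plain dictionaries; the sub-LLM name and all parameter names are hypothetical.

```python
# Hypothetical global parameter set, shared by all sub-LLMs
GLOBAL_PARAMS = {
    "min_pmi": 0.0,
    "max_words_per_multitoken": 4,
    "ignore_list": (),
}

# Hypothetical local overrides, keyed by sub-LLM name
LOCAL_PARAMS = {
    "statistics": {"min_pmi": 0.2, "ignore_list": ("data", "table")},
}

def resolve_params(sub_llm, global_params=GLOBAL_PARAMS, local_params=LOCAL_PARAMS):
    """Return the effective parameters for one sub-LLM.

    Local values take precedence; anything not overridden falls back
    to the global value.
    """
    resolved = dict(global_params)              # copy, leave globals untouched
    resolved.update(local_params.get(sub_llm, {}))
    return resolved

stats_params = resolve_params("statistics")
other_params = resolve_params("unknown sub-LLM")  # falls back to globals
```

With this layout, a sub-LLM without local overrides behaves exactly as the global set dictates, which keeps maintenance simple.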

24. Displaying relevancy scores, and customizing scores

Displaying a relevancy score for each item returned to a prompt helps the user decide which pieces of information are most valuable, or which text entities to view (similar to deciding whether or not to click on a link, in classic search). It also helps with fine-tuning, as scores depend on the parameter set. Finally, some items with a lower score may be of particular interest to the user; it is important not to return top scores only. We are all familiar with Google search, where the most valuable results typically do not show up at the top.

Currently, this feature is not yet implemented in xLLM. However, many statistics are attached to each item, from which one can build a score. The PMI is one of them. In the next version, a custom score will be added. Similar to the PMI function, it will be another function that the user can customize.

25. Intuitive hyperparameters

If your LLM is powered by a deep neural network (DNN), parameters are called hyperparameters. It is not obvious how to fine-tune them jointly unless you have considerable experience with DNNs. Since xLLM is based on explainable AI, parameters, whether backend or frontend, are intuitive. You can easily predict the impact of lowering or increasing values. Indeed, the end-user is allowed to play with frontend parameters. These parameters typically put restrictions on what to display in the results. For instance:

- Minimum PMI threshold: the absolute minimum is zero, and depending on the PMI function, the maximum is one. Do not display content with a PMI below the specified threshold.

- Single tokens to ignore because they are found in too many text entities, for instance ‘data’ or ‘table’. This does not prevent ‘data governance’ from showing up, as it is a multitoken of its own.

- Maximum gap between two tokens to be considered related. Also, list of text separators to identify text sub-entities (a relation between two tokens is established anytime they are found in the same sub-entity).

- Maximum number of words allowed per multitoken. Minimum and maximum word count for a token to be integrated in the results.

- Amount of boost to add to tokens that are also found in the knowledge graph, taxonomy, title, or categories. Amount of stemming allowed.
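The first few restrictions in the list above can be sketched as a simple filter applied to each candidate item before display. The class, field names, and default values below are hypothetical; they only illustrate how such frontend parameters could act on the results.

```python
from dataclasses import dataclass

@dataclass
class FrontendParams:
    """Hypothetical frontend parameters (names and defaults are assumptions)."""
    min_pmi: float = 0.0
    ignore_list: frozenset = frozenset()   # single tokens to ignore
    max_words_per_multitoken: int = 4

def keep_item(multitoken, pmi, params):
    """Decide whether a multitoken (space-separated words) is displayed."""
    words = multitoken.split()
    if pmi < params.min_pmi:
        return False                        # below the PMI threshold
    if len(words) == 1 and multitoken in params.ignore_list:
        return False                        # ignored single token
    if len(words) > params.max_words_per_multitoken:
        return False                        # multitoken too long
    return True

params = FrontendParams(min_pmi=0.1, ignore_list=frozenset({"data", "table"}))
```

Note that the ignore list applies to single tokens only, so `keep_item("data", 0.9, params)` is rejected while `keep_item("data governance", 0.9, params)` is kept.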

26. Sorted n-grams and token order preservation

To retrieve information from the backend tables in order to answer a user query (prompt), the first step consists of cleaning the query and breaking it down into sub-queries. The cleaning consists of removing stopwords, some stemming, adding acronyms, auto-correct and so on. Then only keep the tokens found in the dictionary. The dictionary is a backend table built on the augmented corpus.

Let’s say that after cleaning, we have identified a subquery consisting of tokens A, B, C, D, in that order in the original prompt. For instance (A, B, C, D) = (‘new’, ‘metadata’, ‘template’, ‘description’). The next step consists of looking at all 15 combinations of any number of these tokens, sorted in alphabetical order. For instance, ‘new’, ‘metadata new’, ‘description metadata’, ‘description metadata template’. These combinations are called sorted n-grams. In the xLLM architecture, there is a key-value backend table, where the key is a sorted n-gram. The value is a list of multitokens found in the dictionary (that is, in the corpus), obtained by rearranging the tokens in the parent key. For a key to exist, at least one rearrangement must be in the dictionary. In our example, ‘description template’ is a key (sorted n-gram) and the corresponding value is a list consisting of one element: ‘template description’ (a multitoken).

This type of architecture indirectly preserves, to a large extent, the order in which tokens show up in the prompt, while looking for all potential re-orderings in a very efficient way. In addition, it allows you to retrieve in the corpus the largest text element (multitoken) matching, up to token order, the text in the cleaned prompt. This works even if the user entered a token not found in the corpus, provided that an acronym exists in the dictionary.
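The mechanism can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: multitokens are space-separated strings, the dictionary is a plain list, and the function names are hypothetical rather than taken from xLLM.

```python
from itertools import combinations

def sorted_ngrams(tokens):
    """All non-empty combinations of tokens, each sorted alphabetically."""
    keys = set()
    for size in range(1, len(tokens) + 1):
        for combo in combinations(tokens, size):
            keys.add(" ".join(sorted(combo)))
    return keys

def build_sorted_ngram_table(dictionary):
    """Map each sorted n-gram to the multitokens that rearrange it."""
    table = {}
    for multitoken in dictionary:
        key = " ".join(sorted(multitoken.split()))
        table.setdefault(key, []).append(multitoken)
    return table

def lookup(tokens, table):
    """Keep only the sorted n-grams of the subquery that exist as keys."""
    return {key: table[key] for key in sorted_ngrams(tokens) if key in table}

subquery = ["new", "metadata", "template", "description"]
dictionary = ["template description", "metadata", "new template"]  # toy corpus
table = build_sorted_ngram_table(dictionary)
matches = lookup(subquery, table)
```

With four distinct tokens, `sorted_ngrams` produces the 15 combinations mentioned above, and each lookup is a single dictionary access, so all re-orderings are covered without enumerating permutations.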

27. Blending standard tokens with tokens from the knowledge graph

In the corpus, each text entity is linked to some knowledge graph elements: tags, title, category, parent category, related items, and so on. These are found while crawling and consist of text. I blend them with the ordinary text. They end up in the embeddings, and contribute to enriching the multitoken associations, in a way similar to augmented data. They also add contextual information not limited to token proximity.

28. Boosted weights for knowledge-graph tokens

Not all tokens are created equal, even those with identical spelling. Location is particularly important: tokens can come from ordinary text, or from the knowledge graph (see feature #27). The latter always yields higher quality. When a token is found while parsing a text entity, its counter is incremented in the dictionary, typically by 1. However, in the xLLM architecture, you can add an extra boost to the increment if the token is found in the knowledge graph, as opposed to ordinary text. Some backend parameters allow you to choose how much boost to add, depending on whether the graph element is a tag, category, title, and so on. Another strategy is to use two counters: one for the number of occurrences in ordinary text, and a separate one for occurrences in the knowledge graph.
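A boosted increment can be sketched as follows. The boost values and source labels are hypothetical backend parameters made up for this example; only the idea of a larger increment for knowledge-graph tokens comes from the description above.

```python
from collections import Counter

# Hypothetical increments per token source (ordinary text counts as 1)
BOOST = {"text": 1.0, "tag": 2.0, "category": 2.5, "title": 3.0}

def update_counts(counts, tokens, source="text", boost=BOOST):
    """Increment token counters, with a larger step for graph elements."""
    increment = boost.get(source, 1.0)   # unknown sources count as ordinary text
    for token in tokens:
        counts[token] += increment
    return counts

counts = Counter()
update_counts(counts, ["metadata", "template"], source="text")
update_counts(counts, ["metadata"], source="title")
```

Here ‘metadata’ ends up with a higher count than ‘template’ because it also appears in a title. The two-counter alternative would simply keep one `Counter` per source and combine them at query time.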

29. Versatile command prompt

Most enterprise LLM apps that I am familiar with offer limited options besides the standard prompt. Sure, you can input your corpus, work with an API or SDK. In some cases, you can choose specific deep neural network (DNN) hyperparameters for fine-tuning. But for the most part, they remain a black box. One of the main reasons is that they require training, and training is a laborious process when dealing with DNNs with billions of weights.

With in-memory LLMs such as xLLM, there is no training. Fine-tuning is a lot easier and faster, thanks to explainable AI: see feature #25. In addition to standard prompts, the user can enter command options in the prompt box, for instance -p key value to assign value to parameter key. See Figure 1. The next prompt will be based on the new parameters, without any delay to return results based on the new configuration.
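As an illustration, a prompt box that accepts both standard prompts and the `-p key value` option could be handled along these lines. Only the `-p key value` syntax comes from the text above; the function names, the parameter name `min_pmi`, and the value-casting rule are assumptions.

```python
def parse_value(raw):
    """Cast a raw string to int or float when possible, else keep the string."""
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw

def handle_input(line, params):
    """Update params on '-p key value'; otherwise treat the line as a prompt.

    Returns ('command', key) or ('prompt', line).
    """
    parts = line.split()
    if len(parts) == 3 and parts[0] == "-p":
        _, key, raw = parts
        params[key] = parse_value(raw)    # takes effect for the next prompt
        return ("command", key)
    return ("prompt", line)

params = {}
handle_input("-p min_pmi 0.25", params)   # params now holds min_pmi = 0.25
kind, text = handle_input("metadata template", params)
```

Because the parameters live in memory, the change applies to the very next prompt with no retraining step.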

There are many other options besides fine-tuning: an agent box allowing the user to choose a specific agent, and a category box to choose which sub-LLMs you want to target. You can even check the sizes of the main tables (embeddings, dictionary, contextual), as they depend on the backend parameters.

30. Boost long multitokens and rare single tokens

I use different mechanisms to give more importance to multitokens consisting of at least two words. The higher the number of words, the higher the importance. For instance, if a cleaned prompt contains the multitokens (A, B), (B, C), and (A, B, C) and all have the same count in the dictionary (this happens frequently), xLLM will display results related to (A, B, C) only, ignoring (A, B) and (B, C). Some frontend parameters allow you to set the minimum number of words per multitoken, to avoid returning generic results that match just one token. Also, the PMI metric can be customized to favor long multitokens.
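The (A, B), (B, C), (A, B, C) example can be sketched as a small filter. This is a simplified illustration: multitokens are represented as tuples of words, containment is checked on word sets (ignoring contiguity), and the function name is hypothetical.

```python
def keep_longest(multitokens, counts):
    """Drop a multitoken when a longer one contains all of its words
    and has the same count in the dictionary."""
    kept = []
    for mt in multitokens:
        words = set(mt)
        dominated = any(
            len(other) > len(mt)
            and words <= set(other)
            and counts[other] == counts[mt]
            for other in multitokens
        )
        if not dominated:
            kept.append(mt)
    return kept

counts = {("A", "B"): 3, ("B", "C"): 3, ("A", "B", "C"): 3}
result = keep_longest(list(counts), counts)
```

In this toy case only `("A", "B", "C")` survives. If `("A", "B")` had a different count, it would be kept, since it would then carry information not already covered by the longer multitoken.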

Conversely, some single tokens, even consisting of one or two letters depending on the sub-LLM, may be quite rare, indicating that they have high informative value. There is an option in xLLM to not ignore single tokens with fewer than a specified number of occurrences.

Note that in many LLMs on the market, tokens are very short. They consist of parts of a word, not even a full word, and multitokens are absent. In xLLM, very long tokens are favored, while tokens shorter than a word do not exist. Yet digits like 1 or 2, single letters, IDs, symbols, codes, and even special characters can be tokens if they are found as is in the corpus.

About the Author

Vincent Granville is a pioneering GenAI scientist and machine learning expert, co-founder of Data Science Central (acquired by a publicly traded company in 2020), Chief AI Scientist at MLTechniques.com and GenAItechLab.com, former VC-funded executive, author (Elsevier) and patent owner, with one patent related to LLM. Vincent’s past corporate experience includes Visa, Wells Fargo, eBay, NBC, Microsoft, and CNET. Follow Vincent on LinkedIn.