LLM Chunking, Indexing, Scoring and Agents, in a Nutshell

It is getting difficult to keep up with all the new AI, RAG and LLM terminology, with new concepts popping up almost every week. Which ones are important, and here to stay? Which ones are just old ideas rebranded with a new name for marketing purposes? In this new series, I start with chunking, indexing, scoring, and agents. These words can have different meanings depending on the context. It is noteworthy that specialized LLMs that do not require GPUs have recently been gaining considerable traction. This is especially true for enterprise versions. My discussion here is based on my experience building such models from scratch. It applies to both RAG and LLM.

Chunking

All large language models and RAG apps use a corpus or document repository as input source: for instance, top Internet websites, or a corporate corpus. LLMs produce good grammar and English prose, while RAG systems focus on retrieving useful information in a way similar to Google search, but much better and with a radically different approach. Either way, when building such models, one of the very first steps is chunking.

As the name indicates, chunking consists of splitting the corpus into small text entities. In some systems, text entities have a fixed size. However, a better solution is to use separators. In this case, text entities are JSON elements, parts of a web page, paragraphs, sentences, or subsections in PDF documents. In my xLLM system, text entities, besides standard text, also have a variable number of contextual fields such as agents, categories, parent categories, tags, related items, titles, URLs, images, and so on. A critical parameter is the average size of these text entities. In xLLM, you can optimize the size via real-time fine-tuning.
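To make separator-based chunking concrete, here is a minimal Python sketch. It is not the xLLM implementation: the function name `chunk_by_separator`, the blank-line separator, and the dictionary layout with a `context` field are assumptions chosen for illustration.

```python
import re

def chunk_by_separator(corpus, separator=r"\n\s*\n", context=None):
    """Split a corpus into text entities on a separator (blank lines by default).

    Each text entity is a dict holding the text plus a copy of the contextual
    fields supplied by the caller (hypothetical layout, for illustration only).
    """
    entities = []
    for part in re.split(separator, corpus):
        text = part.strip()
        if text:  # skip empty pieces produced by consecutive separators
            entities.append({"text": text, "context": dict(context or {})})
    return entities

corpus = "Chunking splits the corpus.\n\nIndexing assigns IDs.\n\n\nScoring ranks results."
entities = chunk_by_separator(corpus, context={"category": "LLM basics"})

# The average entity size is the parameter you would monitor and tune.
average_size = sum(len(e["text"]) for e in entities) / len(entities)
```

Changing the `separator` pattern (for instance, to split on sentence boundaries) changes the average size of the resulting text entities.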

Indexing

Chunking and indexing go together. By indexing, I mean assigning an ID (or index) to each text entity. This is different from automatically creating an index for your corpus, a task known as auto-indexing and soon available in xLLM, along with cataloging.

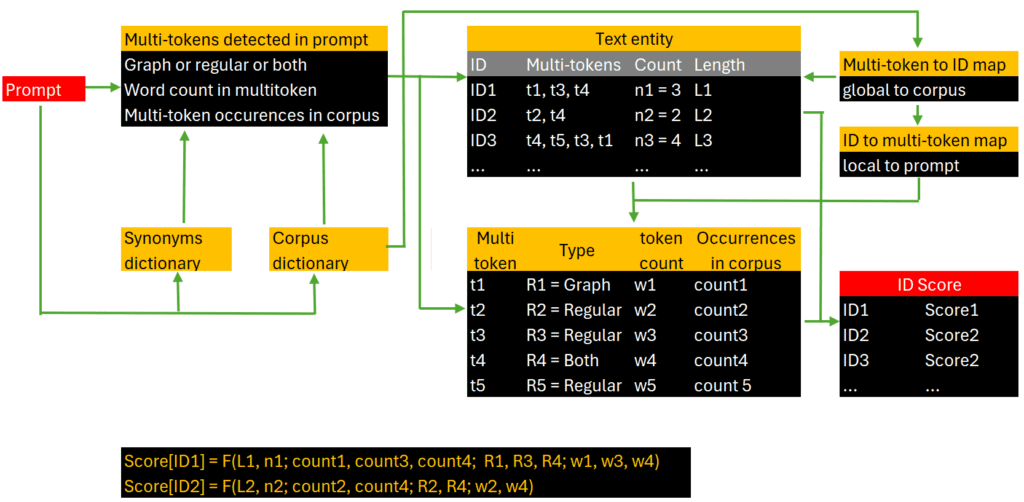

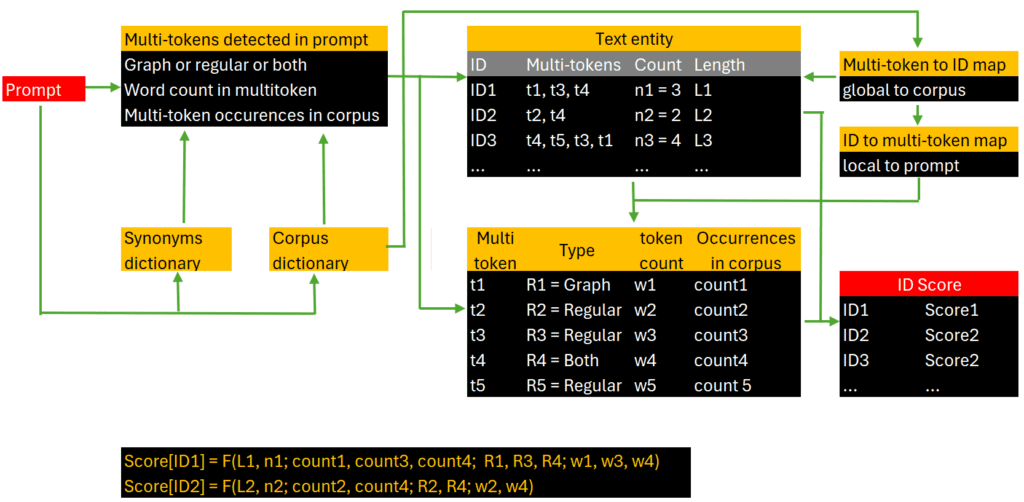

The goal is to efficiently retrieve relevant text entities stored in a database, thanks to the text entity IDs accessible in memory. In particular, a backend table maps each corpus token (multi-token for xLLM) to the list of text entities that contain it. Text entities are represented by their IDs. Then, when processing a prompt, you first extract prompt tokens, match them to tokens in the corpus, and ultimately to the text entities that contain them, using a local transposed version of the backend table in question, restricted to prompt tokens. The end result is a list of candidate text entities to choose from (or to combine and turn into prose) when displaying prompt results.
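The lookup described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not xLLM code: tokens are single lowercase words rather than multi-tokens, and the names `tokenize`, `build_backend_table`, and `candidates_for_prompt` are hypothetical.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase single-word tokens (a simplification of xLLM multi-tokens)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_backend_table(entities):
    """Backend table: token -> sorted list of IDs of text entities containing it."""
    table = defaultdict(set)
    for entity_id, text in entities.items():
        for token in tokenize(text):
            table[token].add(entity_id)
    return {token: sorted(ids) for token, ids in table.items()}

def candidates_for_prompt(prompt, backend_table):
    """Local transposed table: entity ID -> prompt tokens found in that entity."""
    transposed = defaultdict(list)
    for token in dict.fromkeys(tokenize(prompt)):  # de-duplicate, keep order
        for entity_id in backend_table.get(token, []):
            transposed[entity_id].append(token)
    return dict(transposed)

# Text entities keyed by their ID (the index assigned during indexing).
entities = {
    0: "Chunking splits the corpus into text entities.",
    1: "Indexing assigns an ID to each text entity.",
    2: "Relevancy scores rank candidate text entities.",
}
backend_table = build_backend_table(entities)
candidates = candidates_for_prompt("How does indexing rank entities?", backend_table)
```

The keys of `candidates` are the candidate text entity IDs; the values record which prompt tokens each entity matched, which is the raw material for scoring.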

Agents

Sometimes the word agent is a synonym for action: composing an email, solving a math problem, or producing and running some Python code as requested by the user. In this case, the LLM may use an external API to execute the action. However, here, by agent, I mean the type of information the user wants to retrieve: a summary, definitions, URLs, references, best practices, PDFs, datasets, images, instructions about filling out a form, and so on.

Standard LLMs try to guess the intent of the user from the prompt. In short, they automatically assign agents to a prompt. However, a better alternative consists of offering a selection of potential agents to the user, in the prompt menu. In xLLM, agents are assigned post-crawling to text entities, as one of the contextual fields. Agent assignment is performed with a clustering algorithm applied to the corpus, or by simple pattern matching. Either way, it is done very early on in the backend architecture. Then, it is easy to retrieve text entities matching specific agents coming from the prompt.
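As an illustration of the pattern-matching option, the sketch below tags each text entity with agents at indexing time, then filters entities by an agent chosen in the prompt menu. The agent names, the regular expressions in `AGENT_PATTERNS`, and the helper functions are all hypothetical; they are not taken from xLLM.

```python
import re

# Hypothetical agent patterns, for illustration only.
AGENT_PATTERNS = {
    "definition": re.compile(r"\b(is defined as|refers to|definition)\b", re.I),
    "dataset": re.compile(r"\b(dataset|csv|spreadsheet)\b", re.I),
    "best practices": re.compile(r"\b(best practices?|recommended|guidelines?)\b", re.I),
}

def assign_agents(text):
    """Return the agents whose pattern occurs in the text entity."""
    return [agent for agent, pattern in AGENT_PATTERNS.items() if pattern.search(text)]

def entities_for_agent(entities, agent):
    """IDs of the text entities tagged with the agent selected by the user."""
    return [e["id"] for e in entities if agent in e["agents"]]

entities = [
    {"id": 0, "text": "Chunking refers to splitting the corpus into text entities."},
    {"id": 1, "text": "Download the sample dataset and follow the recommended guidelines."},
    {"id": 2, "text": "Vincent wrote several books."},
]

# Agents are assigned once, in the backend, and stored as a contextual field.
for entity in entities:
    entity["agents"] = assign_agents(entity["text"])

dataset_ids = entities_for_agent(entities, "dataset")
```

Because the assignment happens once in the backend, matching an agent at prompt time reduces to a simple field lookup.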

Relevancy Scores

If a prompt triggers 50 candidate text entities, you need to choose a subset to show in the prompt results, so as not to overwhelm the user. Even if the number is much smaller, it is still a good idea to use scores and show them in prompt results: it gives the user an idea of how confident your LLM is about its answer as a whole, or about parts of the answer. To achieve this goal, you assign a score to each candidate text entity.

In xLLM, the computation of the relevancy score, for a specific prompt and a specific text entity, is a combination of the following elements. Each of these elements has its own sub-score.

- The number of prompt multi-tokens present in the text entity.

- Multi-tokens consisting of more than one word and rare multi-tokens (with low occurrence in the corpus) boost the relevancy score.

- Prompt multi-tokens found in a contextual field in the text entity (category, title, or tag) boost the relevancy score.

- The size of the text entity has an impact on the score, favoring long over short text entities.

You then create a global score as follows, for each candidate text entity, with respect to the prompt. First, you sort text entities separately by each sub-score. You use the sub-ranks resulting from this sorting, rather than the actual values. You then compute a weighted combination of these sub-ranks, for normalization purposes. That is your final score. Finally, in prompt results, you display text entities with a global score above some threshold, or (say) the top 5. I call it the new “PageRank” for RAG/LLM, a replacement for the Google technology to decide what to show to the user, for specific queries.
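The rank-based combination can be sketched as follows. Several details are assumptions, since the text does not specify them: a higher rank means a better sub-score, ties share a rank, the weights are made up, and the weighted sum is divided by the total weight. The sub-score values are illustrative, not real results.

```python
def sub_ranks(values):
    """Rank of each value = number of candidates with a strictly lower value.

    Assumption: higher is better, and tied values share the same rank.
    """
    return [sum(other < v for other in values) for v in values]

def global_scores(sub_scores, weights):
    """Weighted combination of sub-ranks.

    sub_scores: {entity_id: {component: value}}
    weights:    {component: weight} (hypothetical values, to be tuned)
    """
    ids = list(sub_scores)
    totals = {entity_id: 0.0 for entity_id in ids}
    for component, weight in weights.items():
        ranks = sub_ranks([sub_scores[i][component] for i in ids])
        for entity_id, rank in zip(ids, ranks):
            totals[entity_id] += weight * rank
    weight_sum = sum(weights.values())
    return {entity_id: total / weight_sum for entity_id, total in totals.items()}

def top_entities(scores, k=5):
    """IDs of the k best text entities; ties are broken by ID."""
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]

# Illustrative sub-scores for three candidate text entities.
sub_scores = {
    10: {"token_count": 3, "rarity": 0.2, "context_hits": 1, "size": 400},
    11: {"token_count": 1, "rarity": 0.9, "context_hits": 0, "size": 120},
    12: {"token_count": 2, "rarity": 0.5, "context_hits": 2, "size": 900},
}
weights = {"token_count": 2.0, "rarity": 1.0, "context_hits": 1.0, "size": 0.5}

scores = global_scores(sub_scores, weights)
best = top_entities(scores, k=2)
```

Working with ranks rather than raw values puts sub-scores measured on very different scales (counts, frequencies, sizes) on a common footing before they are weighted.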

For detailed documentation, source code and a sample input corpus (Fortune 100 company), see my books and articles on the topic, in particular the new book “Building Disruptive AI & LLM Technology from Scratch”, listed at the top, here.

About the Author

Vincent Granville is a pioneering GenAI scientist and machine learning expert, co-founder of Data Science Central (acquired by a publicly traded company in 2020), Chief AI Scientist at MLTechniques.com and GenAItechLab.com, former VC-funded executive, author (Elsevier) and patent owner, with one patent related to LLM. Vincent’s past corporate experience includes Visa, Wells Fargo, eBay, NBC, Microsoft, and CNET. Follow Vincent on LinkedIn.