There is no such thing as a Trained LLM

What I mean here is that typical LLMs are trained on tasks irrelevant to what they will do for the user. It is like teaching a plane to operate efficiently on the runway, but not to fly. In short, it is almost impossible to train an LLM, and evaluating one is just as difficult. Moreover, training is not even necessary. In this article, I dive into all these topics.

Training LLMs for the wrong tasks

Since the beginnings with BERT, training an LLM typically consists of predicting the next tokens in a sentence, or removing some tokens and then having your algorithm fill in the blanks. You optimize the underlying deep neural networks to perform these supervised learning tasks as well as possible. Typically, this involves growing the list of tokens in the training set to billions or trillions, increasing the cost and time to train. However, recently, there is a tendency to work with smaller datasets, by distilling the input sources and token lists. After all, out of one trillion tokens, 99% are noise and do not contribute to improving the results for the end user; they may even contribute to hallucinations. Note that human beings have a vocabulary of about 30,000 keywords, and that the number of potential standardized prompts on a specialized corpus (and thus the number of potential answers) is less than a million.
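To make the two training tasks concrete, here is a minimal sketch of how training examples could be built from a single sentence. The function names and the `[MASK]` placeholder are illustrative assumptions, and whitespace splitting stands in for a real tokenizer.

```python
def next_token_pairs(tokens):
    """Return (context, target) pairs for next-token prediction."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def mask_tokens(tokens, positions, mask="[MASK]"):
    """Hide the tokens at the given positions (fill-in-the-blanks task).

    Returns the masked sequence and a dict {position: hidden token}.
    """
    masked = [mask if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(positions)}
    return masked, targets


# Toy sentence; whitespace splitting is an assumption, not a real tokenizer.
tokens = "the plane taxis on the runway".split()
pairs = next_token_pairs(tokens)
masked, targets = mask_tokens(tokens, {1, 5})

print(pairs[0])   # first (context, target) pair
print(masked)
print(targets)
```

Both tasks only ask the model to reconstruct text it has already seen, which is the point made above: nothing in these examples measures whether an answer is useful to the user.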

More issues linked to training

In addition, training relies on minimizing a loss function that is only a proxy for the model evaluation function. So, you don't even truly optimize next token prediction, itself a task unrelated to what LLMs must perform for the user. I describe an approach that directly optimizes the evaluation metric in a separate article. And while I don't design LLMs for next token prediction, see one exception here, to synthesize DNA sequences.
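The gap between a loss function and an evaluation metric can be shown with a toy example. The sketch below compares cross-entropy (a common training loss) with accuracy (a common evaluation metric) for two hypothetical models; the probabilities are made up for illustration and this is not the approach from the article mentioned above.

```python
import math


def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the true label."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def accuracy(probs, labels):
    """Fraction of examples whose most probable class is the true label."""
    hits = sum(
        1 for p, y in zip(probs, labels)
        if max(range(len(p)), key=p.__getitem__) == y
    )
    return hits / len(labels)


labels = [0, 0, 0, 0]

# Hypothetical model A: very confident on three examples, wrong on the fourth.
model_a = [[0.99, 0.01], [0.99, 0.01], [0.99, 0.01], [0.45, 0.55]]
# Hypothetical model B: barely right on every example.
model_b = [[0.51, 0.49]] * 4

loss_a, loss_b = cross_entropy(model_a, labels), cross_entropy(model_b, labels)
acc_a, acc_b = accuracy(model_a, labels), accuracy(model_b, labels)

print(f"A: loss={loss_a:.3f}, accuracy={acc_a:.2f}")
print(f"B: loss={loss_b:.3f}, accuracy={acc_b:.2f}")
```

Model A wins on the loss while model B wins on the metric, so minimizing the proxy does not guarantee the best score on what is actually evaluated.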

The fact is that LLM optimization is an unsupervised machine learning problem, thus not really amenable to training. I compare it to clustering, as opposed to supervised classification. There is no perfect answer except in trivial situations. In the context of LLMs, laymen may like OpenAI better than my own technology, while the converse is true for busy business professionals and advanced users. Also, new keywords keep coming continuously. Acronyms and synonyms that map to keywords in the training set, yet are absent from the corpus, are usually ignored. All this further complicates training.

Analogy to clustering algorithms

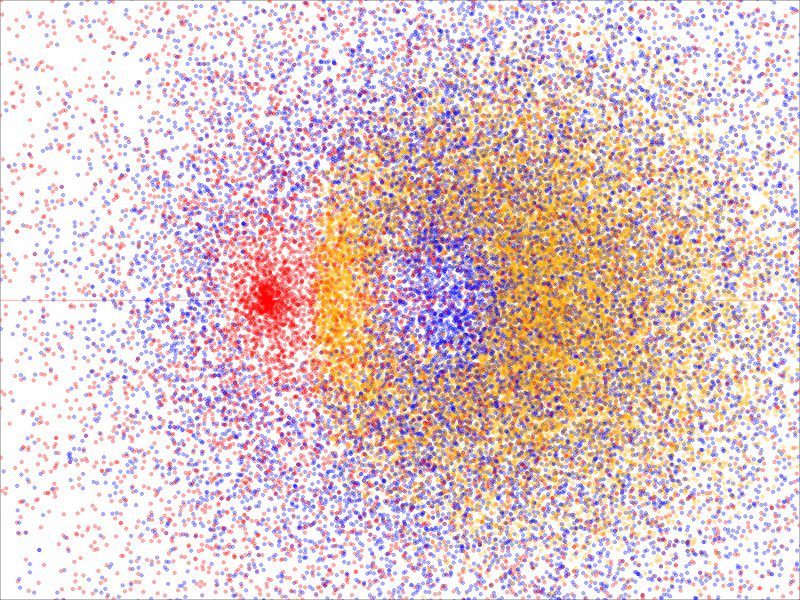

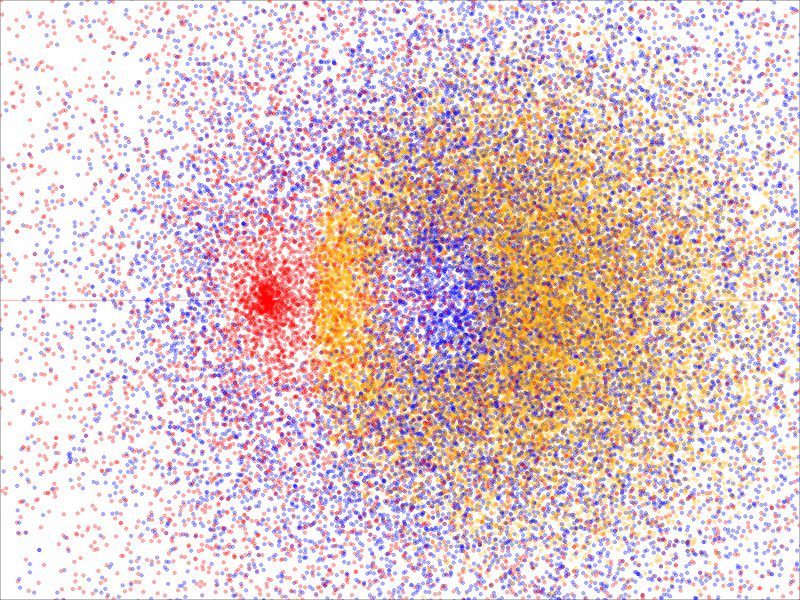

For example, this time in the context of clustering, see Figure 1. It features a dataset with three clusters. How many would you see if all the dots had the same color? Ask someone else, and you may get a different answer. What characterizes each of these clusters? You cannot train an algorithm to correctly identify all cluster structures, though you can for very specific cases like this one. The same is true for LLMs. Interestingly, some vendors claim that their LLM can solve clustering problems. I would be happy to see what they say about the dataset in Figure 1.

For those interested, the dots in Figure 1 represent points on three different orbits of the Riemann zeta function. The blue and red ones are related to the critical strip. The red dots correspond to the critical line, with infinitely many roots. It has no hole. The yellow dots are on an orbit outside the critical strip; the orbit in question is bounded (unlike the other ones) and has a hole in the middle of the picture. The blue orbit also appears not to cover the origin, where the red dots are concentrated; its hole center (the "eye", as in the eye of a hurricane) is on the left side, to the right of the dense red cluster but to the left of the center of the hole in the yellow cloud. Proving that its hole encompasses the origin amounts to proving the Riemann Hypothesis. The blue and red orbits are unbounded.

Can LLMs figure this out? Can you train them to answer such questions? The answer is no. They could succeed if appropriately trained on this very type of example, but fail on a dataset with a different structure (the equivalent of a different type of prompt). This is one reason why it makes sense to build specialized LLMs rather than generic ones.
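The ambiguity in counting clusters can be illustrated with a much simpler dataset than the one in Figure 1. The sketch below uses a hypothetical one-dimensional gap rule (start a new cluster whenever two consecutive points are farther apart than `max_gap`); the data and thresholds are made up, and the rule is only one of many possible definitions of a cluster.

```python
def gap_clusters(points, max_gap):
    """Group 1-D points; a new cluster starts when the gap exceeds max_gap."""
    ordered = sorted(points)
    clusters = [[ordered[0]]]
    for x in ordered[1:]:
        if x - clusters[-1][-1] > max_gap:
            clusters.append([x])      # gap too large: open a new cluster
        else:
            clusters[-1].append(x)    # close enough: extend the current one
    return clusters


# Toy data: three tight groups, two of which are close to each other.
points = [0.0, 0.1, 0.2, 1.0, 1.1, 1.2, 5.0, 5.1, 5.2]

# The "number of clusters" depends entirely on the scale you choose.
counts = {gap: len(gap_clusters(points, gap)) for gap in (0.05, 0.5, 2.0, 10.0)}
print(counts)
```

The same nine points yield nine, three, two, or one cluster depending on the threshold, and none of these answers is wrong in itself. That is the sense in which there is no single target to train against.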

Evaluating LLMs

There are numerous evaluation metrics to assess the quality of an LLM, each measuring a specific type of performance. It is reminiscent of test batteries for random number generators (PRNGs), used to assess how random the generated numbers are. But there is a major difference. In the case of PRNGs, the target output has a known, prespecified uniform distribution. The reason to use so many tests is that no one has yet implemented a universal test, based for instance on the full multivariate Kolmogorov-Smirnov distance. Actually, I did recently and will publish an article about it. It even has its Python library, here.
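As a point of reference, here is a minimal sketch of the classical one-dimensional Kolmogorov-Smirnov distance between a sample and the uniform distribution on [0, 1]. It is the textbook univariate statistic, not the multivariate version or the library mentioned above; the function name, seed, and sample size are arbitrary choices.

```python
import random


def ks_uniform(sample):
    """Kolmogorov-Smirnov distance between a sample and the Uniform(0, 1) CDF."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        # The empirical CDF jumps from i/n to (i+1)/n at x; the target CDF is x.
        d = max(d, (i + 1) / n - x, x - i / n)
    return d


rng = random.Random(42)
good = [rng.random() for _ in range(10_000)]
biased = [u * u for u in good]  # squaring pushes the mass toward 0

d_good = ks_uniform(good)
d_biased = ks_uniform(biased)
print(f"uniform sample: D = {d_good:.4f}")
print(f"biased sample:  D = {d_biased:.4f}")
```

The biased sample has CDF sqrt(x), so its distance to the uniform CDF is close to 0.25 (the maximum of sqrt(x) - x), while a good generator gives a distance that shrinks as the sample grows. A single number of this kind is possible only because the target distribution is known in advance.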

For LLMs that answer arbitrary prompts and output English sentences, there is no possible universal test. Even though LLMs are trained to correctly replicate the full multivariate token distribution (a problem similar to that of PRNGs, thus with a universal evaluation metric), the goal is not token prediction, and thus the evaluation criteria must be different. There will never be a universal evaluation metric except in very peculiar cases, such as LLMs for predictive analytics. Below is a list of important features lacking in current evaluation and benchmarking metrics.

Overlooked criteria, hard to measure

- Exhaustivity: Ability to retrieve absolutely every relevant item, given the limited input corpus. Full exhaustivity is the ability to retrieve everything on a specific topic, like chemistry, even if it is not in the corpus. This may involve crawling in real time to answer the prompt.

- Inference: Ability to invent correct answers for problems with no known solution, for instance proving or creating a theorem. I asked GPT if the sequence (a^n) is equidistributed modulo 1 if a = 3/2. Instead of providing references as I had hoped (it usually does not), GPT created a short proof on the fly, and not just for a = 3/2; Perplexity failed at that. Using synonyms and links between knowledge elements can help achieve this goal.

- Depth, conciseness and disambiguation are not easy to measure. Some users find long English sentences superior to concise bullet lists grouped into sections in the prompt results. For others, the opposite is true. Experts want depth, but it can be overwhelming to the layman. Disambiguation can be achieved by asking the user to choose between different contexts; this option is not available in most LLMs. As a result, these qualities are rarely tested. The best LLMs, in my opinion, combine depth and conciseness, providing far more information in much less text than their competitors. Metrics based on entropy could help in this context.

- Ease of use is rarely tested, as all UIs consist of a simple search box. However, xLLM is different, allowing the user to select agents, negative keywords, and sub-LLMs (subcategories). It also allows you to fine-tune or debug in real time thanks to intuitive parameters. In short, it is more user-friendly. For details, see here.

- Security is also difficult to measure. But LLMs with a local implementation, not relying on external APIs and libraries, are typically more secure. Finally, evaluation metrics should take into account the relevancy scores displayed in the results. As of now, only xLLM displays such scores, telling the user how confident it is in its answer.
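The list above suggests that entropy-based metrics could help measure conciseness. One crude possibility, sketched below, is the Shannon entropy of the token distribution in an answer, in bits per token. This is only an assumption about what such a metric might look like: it works on surface tokens split on whitespace, and says nothing about whether the information is correct or relevant.

```python
import math
from collections import Counter


def token_entropy(text):
    """Shannon entropy (bits per token) of the whitespace-token distribution."""
    tokens = text.lower().split()
    n = len(tokens)
    if n == 0:
        return 0.0
    counts = Counter(tokens)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


# Two made-up answers: one repetitive, one with no repeated token.
verbose = "the answer is the answer is the answer is the answer"
concise = "zeta zeros lie on the critical line"

print(f"verbose: {token_entropy(verbose):.3f} bits/token")
print(f"concise: {token_entropy(concise):.3f} bits/token")
```

A repetitive answer scores lower per token than one where every token is distinct, which gives a rough handle on "more information in less text". A practical metric would need to work on concepts or keywords rather than raw tokens.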

Benefits of untrained LLMs

In view of the preceding arguments, one may wonder whether training is necessary, especially since training is not done to provide better answers, but to better predict the next tokens. Without training, with its heavy computations and black-box deep neural network machinery, LLMs can be far more efficient yet far more accurate. To generate answers in English prose based on the retrieved material, one may use pre-made answer templates where keywords can be plugged in. This can turn specialized sub-LLMs into massive Q&As with a million questions and answers covering all potential inquiries, and deliver real ROI to the client by not requiring GPUs and expensive training.
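The idea of pre-made answers with pluggable keywords can be sketched in a few lines. The template names, slot names, and wording below are hypothetical and only illustrate the principle; they are not taken from xLLM.

```python
# Hypothetical answer templates, keyed by the type of question.
TEMPLATES = {
    "definition": "{term} is {definition}.",
    "comparison": "{a} differs from {b} in that {difference}.",
}


def render_answer(kind, **slots):
    """Fill a pre-made answer template with retrieved keywords."""
    return TEMPLATES[kind].format(**slots)


answer = render_answer(
    "definition",
    term="Exhaustivity",
    definition="the ability to retrieve every relevant item in the corpus",
)
print(answer)
```

No neural network is involved at answer time: the cost is a dictionary lookup and a string substitution, and every sentence produced can be traced back to a template and the retrieved items.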

That is why I created xLLM, and why I call it LLM 2.0, with its radically different architecture and next-gen features including the unique UI. I describe it in detail in my recent book "Building Disruptive AI & LLM Technology from Scratch", available here. Additional research papers are available here.

That said, xLLM still requires fine-tuning, for back-end and front-end parameters. It also allows the user to test and select his favorite parameters. Then, it blends the most popular ones to automatically create default parameter sets. I call it self-tuning. It is probably the simplest reinforcement learning method.
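One way to picture self-tuning is a majority vote over the parameter values that users select. The sketch below is an assumption about how such blending could work, not xLLM's actual code; the parameter names `max_results` and `min_score` are made up for illustration.

```python
from collections import Counter


def blend_defaults(user_choices):
    """For each parameter, keep the value selected by the most users."""
    names = {name for choice in user_choices for name in choice}
    defaults = {}
    for name in sorted(names):
        votes = Counter(choice[name] for choice in user_choices if name in choice)
        defaults[name] = votes.most_common(1)[0][0]
    return defaults


# Hypothetical favorite parameter sets saved by three users.
user_choices = [
    {"max_results": 10, "min_score": 0.5},
    {"max_results": 10, "min_score": 0.7},
    {"max_results": 20, "min_score": 0.7},
]

defaults = blend_defaults(user_choices)
print(defaults)
```

Each new user selection acts as feedback that can shift the defaults, which is what makes the scheme resemble a very simple form of reinforcement learning.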

About the Author

Vincent Granville is a pioneering GenAI scientist and machine learning expert, co-founder of Data Science Central (acquired by a publicly traded company in 2020), Chief AI Scientist at MLTechniques.com and GenAItechLab.com, former VC-funded executive, author (Elsevier) and patent owner, with one patent related to LLMs. Vincent's past corporate experience includes Visa, Wells Fargo, eBay, NBC, Microsoft, and CNET. Follow Vincent on LinkedIn.