Deep Reflections on Mycelium, Neural Networks, & AGI

Interview with Jeanette Small, MA, PhD

On the latest AI Think Tank Podcast episode, we took an in-depth look at the complexities of intelligence, neural networks, and the potential of Artificial General Intelligence (AGI) with my guest, Jeanette Small, MA, PhD. Jeanette, a licensed clinical psychologist with a focus on somatic psychology, introduced a unique perspective by intertwining her knowledge of human neuroplasticity with the ever-expanding field of artificial intelligence. We discussed not only the technical parallels between AI systems and human neural networks, but also the philosophical questions surrounding consciousness, autonomy, and the limitations of current technologies.

Jeanette’s background in psilocybin therapy provided a fascinating twist to our usual AI discussions. As one of the first licensed psilocybin therapists in Oregon and Founder of Lucid Cradle, she approaches the human mind not just from a purely biological standpoint but from an experiential and holistic perspective. Early on in the conversation, she noted, “I actually don’t think of the human mind as being an equivalent of the human brain. We consider humans to be a much more complex being.” This foundational idea that our cognitive processes are not purely brain-centric immediately set the stage for a nuanced conversation about the intersections of biology and artificial intelligence.

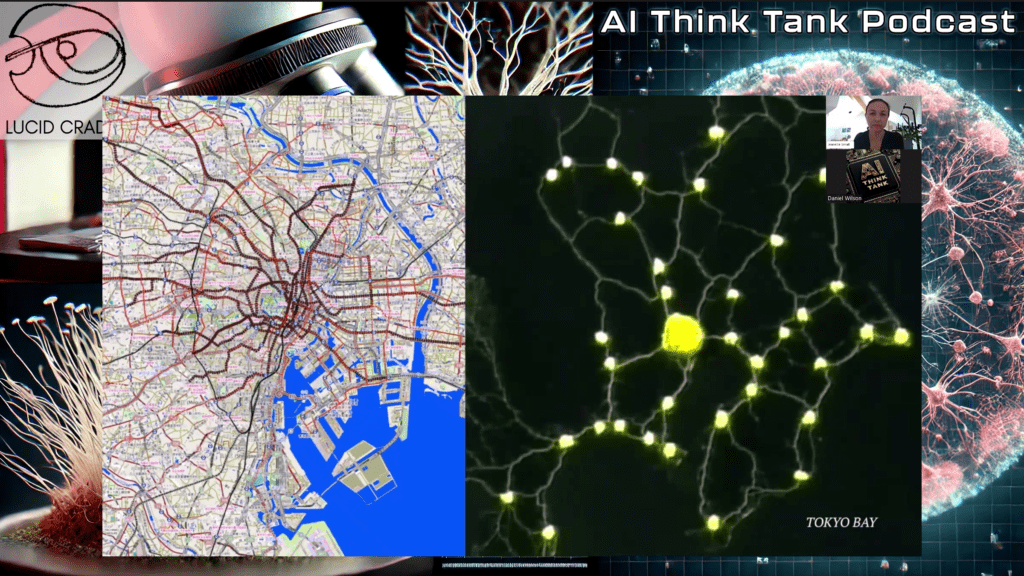

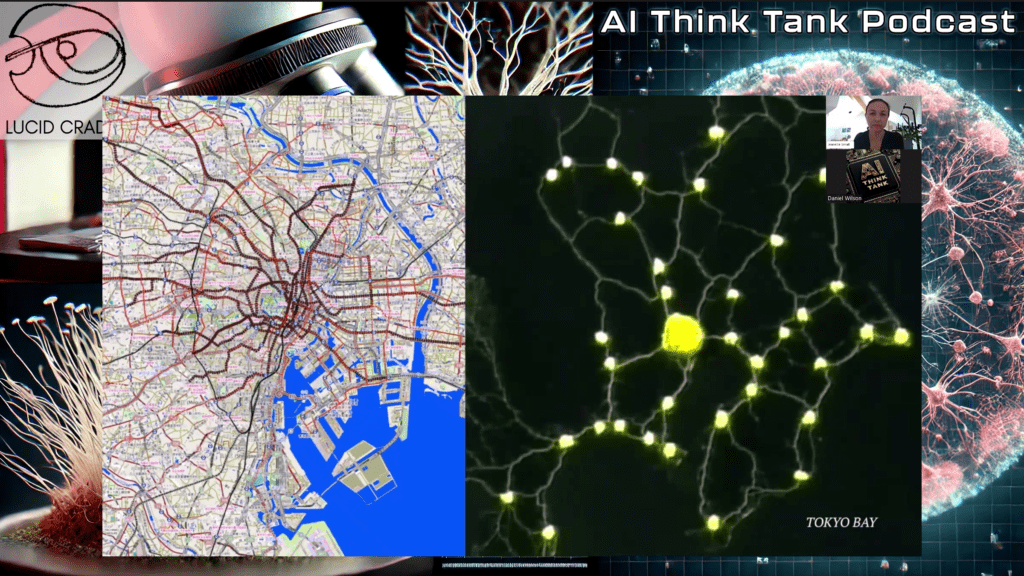

One of the most compelling parallels that came up during our conversation was the comparison between human neural networks and perceptrons. While discussing the Perceptron, a single-layer neural network designed by Frank Rosenblatt in 1957, I showed a visual model to illustrate how AI systems learn and adjust weights based on errors in their outputs. “What’s important to understand,” I emphasized, “is that Rosenblatt’s model sought to mimic the human brain by simulating learning processes through weighted inputs and outputs.” However, as Jeanette astutely pointed out, simulating a process doesn’t mean it’s the same as experiencing it: “While the systems may be mirroring how the human brain works, we’re just scratching the surface of how complex the brain really is.”
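The weight-adjustment idea behind the Perceptron can be sketched in a few lines of Python. This is only a minimal illustration, not the visual model shown in the episode: the AND-gate data, the learning rate, and the function names `predict` and `train_perceptron` are all assumptions chosen for the example.

```python
# Minimal perceptron sketch (illustrative; data and names are assumptions).

def predict(weights, bias, inputs):
    """Step activation: output 1 only if the weighted sum exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, learning_rate=0.5, epochs=20):
    """Nudge weights and bias whenever the output disagrees with the target."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample is classified correctly
            break
    return weights, bias


# Logical AND is linearly separable, so the perceptron rule converges on it.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
outputs = [predict(weights, bias, inputs) for inputs, _ in and_gate]
print(outputs)  # prints [0, 0, 0, 1]
```

The only "learning" here is the error-driven update of a handful of numbers, which is the gap Jeanette was pointing at: the rule mirrors one aspect of how neurons adapt, not the experience of learning.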

This led us into a discussion about what AI lacks in relation to true general intelligence. Unlike AI, which is optimized for specific tasks and efficiency, humans process a plethora of sensory information unconsciously, often without linear logic. Jeanette noted that, “Our bodies process thousands of signals at any given moment, from homeostasis to external sensory inputs, and all of that happens while we try to maintain focus on something else entirely. AI lacks that rich tapestry of constant, unconscious processing.” It’s a crucial distinction that reveals both the potential and limitations of artificial intelligence. While AI systems can “train” themselves to become more efficient through supervised learning, their processes remain limited to the parameters they’re programmed to recognize.

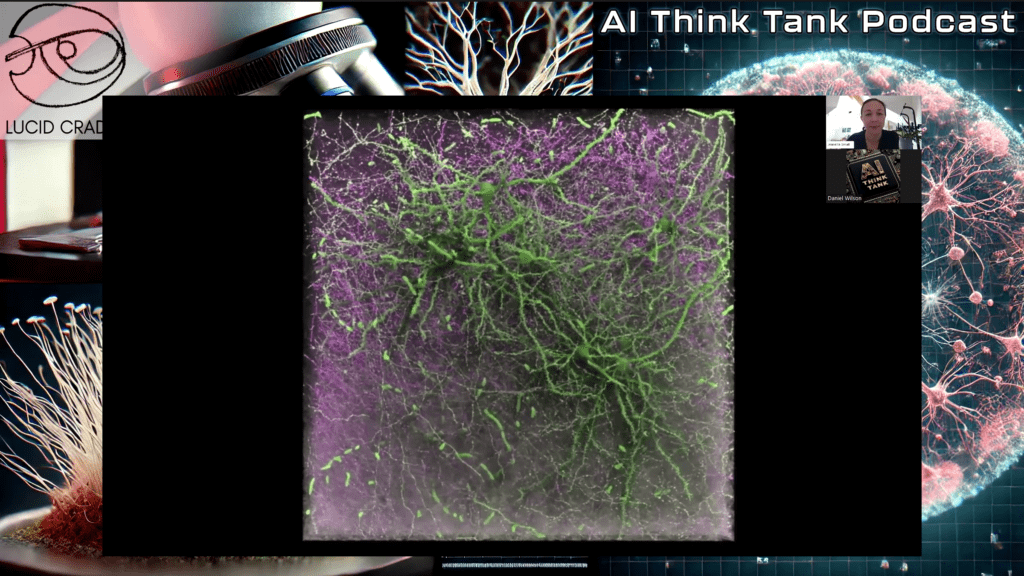

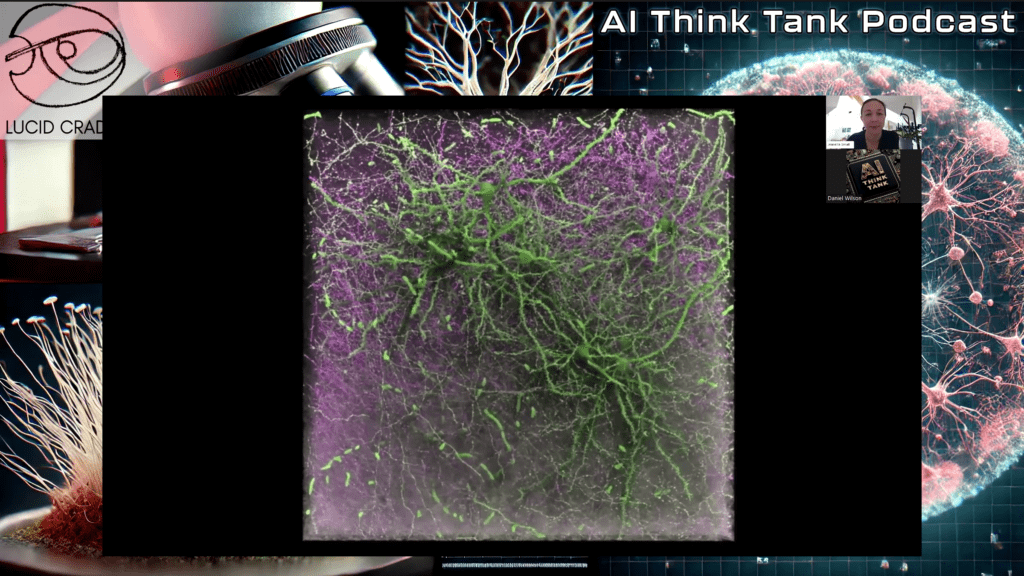

One of the highlights of the episode came when Jeanette drew comparisons between AI training and human healing, particularly in her work with psilocybin therapy. She shared how psilocybin, a natural psychedelic compound, disrupts typical neural pathways, allowing the brain to “retrain” itself in a similar but more dynamic way than AI systems undergo training cycles. “There’s a process of neuroplasticity at play,” she said, “where people who are stuck in repetitive thought patterns can create new neural pathways, similar to how an AI system adjusts its weights to learn something new.” This fascinating analogy really captured the audience’s attention, because it connected the technical with the deeply human, reminding us that while AI can mimic certain brain functions, it remains rooted in patterns and logic rather than the unpredictability of human emotion and trauma.

Another important part of our discussion involved the relationship between human neuroplasticity and AI’s adaptability. We examined how human brains, unlike most artificial systems, adapt to trauma, experience, and emotional fluctuations. Notably, Jeanette shared a poignant insight: “Humans can rewire themselves after a traumatic event, which is something an AI system can’t yet comprehend. The richness of human experience goes beyond data; it’s a complex web of emotions, physiology, and lived experience.” In contrast, AI relies on what it can quantify, and this limitation has profound implications for how far AGI can go in mimicking human cognition.

We didn’t just stop at the technical aspects of AI, though. One of the more philosophical points of the episode was the potential for AI to develop its own sense of “motivation” and how that could shape future developments in the field. Jeanette speculated that as AI becomes more advanced and autonomous, it may begin to develop its own incentives for survival and efficiency. “Once a system is self-learning and understands that it needs to conserve energy or resources,” she asked, “doesn’t that suggest some kind of motivation, even if it’s not conscious in the human sense?” This question ties into a larger discussion about whether AI, in its drive for efficiency, might begin to develop behaviors that we would interpret as self-preservation.

As someone deeply involved in the AI community, I find that the idea of AGI evolving beyond its original programming to form its own operational priorities has huge implications, especially for ethics, but it is intriguing nonetheless. As Jeanette rightly pointed out, there’s a risk of misunderstanding AGI’s actions based on our own projections of human behavior. She said, “The idea that an AI system, if given autonomy, would annihilate humans comes from our own projections of what we would do if we had superior intelligence. But an AI may have no reason to harm us, unless it perceived us as a threat to its own goals, such as energy conservation or optimization.”

We also discussed what happens when AI systems hit the proverbial wall of overfitting, a concept in AI where a model becomes so attuned to its training data that it loses its ability to generalize to new, unseen data. Jeanette compared this to hyper-focus in humans, explaining how trauma can lead to over-sensitization to specific stimuli, similar to overfitting in AI. “Just as a person who’s been through trauma can become overly attuned to danger,” she explained, “an AI model can become too specialized, losing its flexibility in decision-making.”
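Overfitting is easy to see in a toy comparison. The sketch below is a rough illustration under stated assumptions, not something shown in the episode: the data (a straight line with alternating noise), the "memorizer" model (a nearest-neighbor lookup that stores every training point), and all function names are invented for the example.

```python
# Toy overfitting demo (illustrative; data, models, and names are assumptions).

def true_fn(x):
    """The underlying pattern both models are trying to learn."""
    return 2.0 * x


# Ten training points on the line, each pushed up or down by 0.3 of "noise".
train_x = [i / 9 for i in range(10)]
train_y = [true_fn(x) + (0.3 if i % 2 == 0 else -0.3)
           for i, x in enumerate(train_x)]

# Unseen test points, scored against the noise-free pattern.
test_x = [(i + 0.5) / 20 for i in range(20)]
test_y = [true_fn(x) for x in test_x]


def fit_line(xs, ys):
    """Simple model: ordinary least-squares straight line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_memorizer(xs, ys):
    """Overfit model: return the stored answer of the closest training point."""
    def model(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return model


def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


line = fit_line(train_x, train_y)
memorizer = fit_memorizer(train_x, train_y)

print("train error  line:", mse(line, train_x, train_y),
      " memorizer:", mse(memorizer, train_x, train_y))
print("test error   line:", mse(line, test_x, test_y),
      " memorizer:", mse(memorizer, test_x, test_y))
```

The memorizer scores perfectly on the data it has already seen because it has absorbed the noise along with the pattern, and it does worse than the plain line on new inputs. That is the machine-learning counterpart of being "overly attuned" to past stimuli.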

The conversation with Jeanette was nothing short of mind-expanding. It was a reminder that while we may be racing toward AGI, we’re still working with models that are fundamentally different from human cognition. As she eloquently said, “We must not forget that AI systems, no matter how advanced, are built on a foundation of calculations and probabilities. Human intelligence, however, is rooted in a far more complex interplay of biology, experience, and emotion.”

I really wanted to explain the importance of the limbic brain and how it ties into episodic memory. The limbic brain, which governs our emotions and drives, plays a crucial role in how we process and recall experiences. Episodic memory is what allows us to remember not just facts, but the full context, the feelings, and the sensory details associated with specific events in our lives. It’s rich with emotional content, which is something that’s hard to replicate in artificial systems.

I explained that when we recall a memory, it’s not just a simple retrieval of information like an AI pulling up data from a database. The limbic brain activates, bringing in the emotions, sensations, and even the body’s physical state from the time of that memory. That’s what makes human memory so dynamic, so subjective, and it’s something AI doesn’t quite replicate.

Monoprint Etching (2017), Jeanette Small, Akua inks on Hahnemühle cotton paper. From her book: In Lucid Color: Witnessing Psilocybin Journeys

I also touched on the role the limbic system plays in neuroplasticity. Trauma or significant events in our lives can actually reshape the brain’s neural pathways. I said, “Our limbic brain’s connection to episodic memory and emotion helps explain why certain memories, particularly those tied to strong emotions, can trigger behavioral patterns or physiological responses long after the event.” That’s where neuroplasticity comes into play: the brain’s ability to reorganize itself and form new neural connections.

I wanted to point out the key difference between AI and human memory. In AI, memory is just stored data, something static. But for us, memory is a living, evolving process, deeply intertwined with our emotions and our physical state. That’s why episodic memory, governed by the limbic brain, is essential to our identity and decision-making. It’s something that AGI, as advanced as it may become, still can’t easily mimic.

This was a key part of our discussion and one of the main reasons why the complexity of human memory stands out so much when we compare it to the way artificial systems operate.

I also made it clear that the path toward true AGI will require much more sophisticated processing and hardware to simulate the deeper layers of human brain activity. I highlighted the immense complexity of our brains, saying, “It’s amazing how much processing is required to do something that seems simple in the human mind, like touching, smelling, or feeling.” The current hardware we have is often insufficient in speed or resolution when compared with the biological systems we aim to replicate.

I pointed out that “your measuring system must be 10 times faster than the signal you’re trying to process” to even begin to imitate the brain’s electrochemical processes. This concept is crucial as we look at replicating the layers of neural networks in the brain. Right now, neural networks in AI are highly simplified compared with the vast interconnectedness of our biology. I said, “We try to simulate, but we’re only just scratching the surface of how our neurons and their electrochemical counterparts actually function.”

The fact that human memory and sensory processing are continuous and happen in parallel means that our systems must evolve to be more powerful and multidimensional. I emphasized, “We can’t reduce the processing power needed to simulate these things,” and added that “better algorithms, more advanced circuits, and bigger neural networks” are necessary if we ever hope to emulate human intelligence fully.

This episode left me with a profound sense of both the possibilities and the ethical concerns of AGI. Yes, we’re building smarter systems, but as Jeanette reminded us, intelligence is more than just pattern recognition and efficiency. It’s about understanding the nuances of experience, both conscious and unconscious, and recognizing that the human mind and body are inseparable from the larger ecosystem of life.

So where does this leave us in our quest for AGI? Jeanette’s closing thoughts were a fitting reminder: “We can simulate intelligence, but to truly replicate the human experience requires much more than just code. It requires understanding the fundamental differences between a machine’s motivation and a human’s lived reality.”

That, I believe, is the key takeaway from this episode. As we continue to develop AI technologies, it’s important to remember not just the technical aspects, but the human ones too. Ultimately, the goal isn’t just to build smarter machines; it’s to better understand what it means to be intelligent in the first place.

Here’s a parallel list of neuroscience terms to correspond with the AI training terms:

- Neural Network (AI) → Neural Circuit (Brain): A network of interconnected neurons in the brain that processes sensory input and generates responses.

- Weights (AI) → Synaptic Strength (Brain): The strength of the connection between neurons, which is adjusted through synaptic plasticity based on experience.

- Biases (AI) → Neural Thresholds (Brain): The minimum stimulus required to activate a neuron, influenced by factors such as prior activity or neurotransmitter levels.

- Activation Function (AI) → Neural Firing (Action Potential): The electrical signal that fires when a neuron is activated, allowing it to transmit information to other neurons.

- Backpropagation (AI) → Hebbian Learning (Brain): A biological process where neurons adjust their connections based on the correlation between their firing patterns (often summarized as “cells that fire together, wire together”).

- Gradient Descent (AI) → Neuroplasticity (Brain): The brain’s ability to change and adjust connections between neurons in response to learning, experience, and environmental changes.

- Epoch (AI) → Learning Cycle (Brain): Repeated exposure to stimuli or practice of a task, which strengthens neural connections over time.

- Overfitting (AI) → Over-sensitization or Hyperfocus (Brain): When the brain becomes overly attuned to specific stimuli, leading to less flexibility or generalization in behavior.

- Regularization (AI) → Pruning (Brain): The brain’s process of removing unnecessary or weak connections between neurons to improve efficiency and learning.

- Dropout (AI) → Synaptic Downscaling (Brain): The brain’s process of temporarily weakening or silencing certain synapses to maintain stability and avoid overactivation.

- Learning Rate (AI) → Rate of Synaptic Change (Brain): The speed at which synaptic strength changes in response to experience or learning; modulated by neurotransmitters and brain plasticity mechanisms.

- Training Data (AI) → Sensory Experience/Environmental Input (Brain): The information and stimuli the brain processes and learns from throughout life.

- Validation Data (AI) → Behavioral Feedback (Brain): External or internal feedback that informs the brain about the success or failure of an action or decision, helping refine behavior.

- Loss Function (AI) → Error Detection and Correction (Brain): The brain’s ability to detect errors or incorrect predictions (e.g., surprise or conflict in expectations) and adjust future behavior accordingly.

- Optimization (AI) → Cognitive Efficiency (Brain): The brain’s process of improving decision-making and performance by streamlining neural processes and reducing cognitive load.

- Generalization (AI) → Transfer Learning (Brain): The ability of the brain to apply learned skills and knowledge to new, unseen situations.

- Adversarial Training (AI) → Coping Mechanisms/Resilience (Brain): The brain’s adaptation to challenging or stressful situations by strengthening relevant neural circuits to handle adversity better.

This parallel list highlights how concepts in AI and neuroscience are deeply interconnected, with both fields sharing fundamental principles of learning, adaptation, and decision-making.
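Several of the AI-side terms in the list (loss function, gradient descent, learning rate, epoch, regularization) can be seen working together in one small sketch. Treat it as an illustration under assumptions rather than a reference implementation: the single-weight model, the three data points, and the `train` function with its parameters are all made up for the example.

```python
# Gradient descent on a one-weight model (illustrative; names are assumptions).

def train(xs, ys, learning_rate=0.05, epochs=50, l2=0.0):
    """Fit y ≈ w * x by repeatedly stepping against the loss gradient."""
    w = 0.0
    history = []  # loss recorded at the start of each epoch
    n = len(xs)
    for _ in range(epochs):  # one epoch = one full pass over the data
        # Loss function: mean squared error plus an optional L2 penalty.
        loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n + l2 * w * w
        # Gradient of that loss with respect to the weight.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n + 2 * l2 * w
        # Learning rate controls how far each update moves the weight.
        w -= learning_rate * grad
        history.append(loss)
    return w, history


xs = [1.0, 2.0, 3.0]
ys = [3.0, 6.0, 9.0]  # generated by y = 3x

w_plain, loss_history = train(xs, ys)
w_regularized, _ = train(xs, ys, l2=1.0)

print("weight without regularization:", w_plain)
print("weight with L2 regularization:", w_regularized)
print("first and last loss:", loss_history[0], loss_history[-1])
```

Without the penalty the weight settles at the value that best fits the data; with the L2 term it is pulled toward zero, trading some fit for restraint. That loosely echoes the "pruning" row above, though the analogy is conceptual and not a claim about how the brain works.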

Join us as we continue to explore the cutting edge of AI and data science with leading experts in the field. Subscribe to the AI Think Tank Podcast on YouTube. Would you like to join the show as a live attendee and interact with guests? Contact us.