Large AI Apps: Optimizing the Databases Behind the Scenes

In this article, I describe some of the most common types of databases that apps such as RAG or LLMs rely on. I also present tips and techniques to optimize these databases. The efficiency improvements address speed, bandwidth or size, and real-time processing.

Some common types of databases

Vector and graph databases are among the most popular nowadays, especially for GenAI and LLM apps. Most can also handle tasks performed by traditional databases and understand SQL and other languages (NoSQL, NewSQL). Some are optimized for fast search and real-time processing.

- In vector databases, features (the columns in a tabular dataset) are processed jointly using encoding, rather than column by column. The encoding uses quantization, such as truncating 64-bit real numbers to 4 bits. The big gain in speed outweighs the small loss in accuracy. Typically, in LLMs, you use these databases to store embeddings.

- Graph databases store data as nodes and connections between nodes, with weights on the connections. Examples include knowledge graphs and taxonomies with categories and subcategories. In some cases, a category can have multiple parent categories and multiple subcategories, making tree structures unsuitable. Keyword associations are a great fit for graph databases.
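To make the quantization idea concrete, here is a minimal Python sketch of uniform 4-bit scalar quantization applied to a single embedding vector. It assumes simple per-vector min/max scaling and packs two 4-bit codes per byte; the function names are hypothetical, and real vector databases typically use more elaborate schemes (for example product quantization), so treat it as an illustration rather than how any particular engine works.

```python
def quantize_4bit(vec):
    """Map each float to an integer code in 0..15 (16 levels) using per-vector min/max scaling."""
    lo, hi = min(vec), max(vec)
    step = (hi - lo) / 15 if hi > lo else 1.0  # 16 levels -> 15 intervals
    codes = [round((x - lo) / step) for x in vec]
    return codes, lo, step


def dequantize_4bit(codes, lo, step):
    """Reconstruct approximate floats from the 4-bit codes."""
    return [lo + c * step for c in codes]


def pack_nibbles(codes):
    """Pack two 4-bit codes per byte (16x smaller than 64-bit floats)."""
    if len(codes) % 2:
        codes = codes + [0]  # pad to an even length
    return bytes((codes[i] << 4) | codes[i + 1] for i in range(0, len(codes), 2))


# Usage: an 8-dimensional toy embedding.
emb = [0.12, -0.87, 0.45, 0.99, -0.33, 0.05, 0.71, -0.5]
codes, lo, step = quantize_4bit(emb)
approx = dequantize_4bit(codes, lo, step)
packed = pack_nibbles(codes)
print(codes)
print(len(packed), "bytes instead of", 8 * len(emb))  # 4 bytes instead of 64
max_err = max(abs(a - b) for a, b in zip(emb, approx))
print(max_err)  # at most step / 2, up to float rounding
```

Packing two codes per byte is what yields the 16x saving over 64-bit floats; the price is the reconstruction error, which is at most half a quantization step per component.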

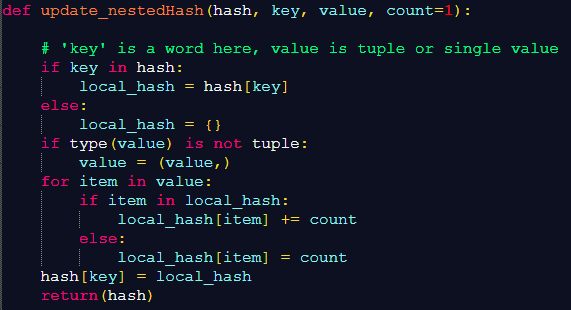

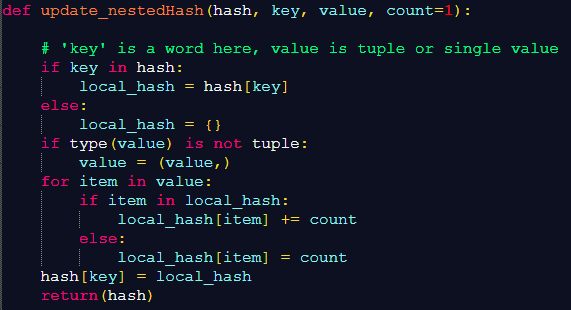

- JSON and bubble databases deal with data lacking structure, such as text and web content. In my case, I use in-memory key-value tables, that is, hash tables or dictionaries in Python (see Figure 1). The values are key-value tables themselves, resulting in nested hashes (see here). The benefit: in RAG/LLMs, this structure mirrors the organization of JSON text entities from the input corpus.

- Some databases focus on columns rather than rows: we call them column databases. Some fit in memory: we call them in-memory databases. The latter offer faster execution. Another way to improve efficiency is through a parallel implementation, for instance similar to Hadoop.

- In object-oriented databases, you store data as objects, similar to object-oriented programming languages. Database records consist of objects. This facilitates direct mapping between objects in your code and objects in the database.

- Hierarchical databases are good at representing tree structures, a special type of graph. Network databases go one step further, allowing more complex relationships than hierarchical databases, in particular multiple parent-child relationships (a record can have several parents).

- For special needs, consider time series, geospatial, and multimodel databases (not the same meaning as multimodal). Multimodel databases support multiple data models (document, graph, key-value) within a single engine. You can also treat image and soundtrack repositories as databases. In general, file repositories are de facto databases.
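The nested-hash structure described above (key-value tables whose values are themselves key-value tables) maps directly onto Python dictionaries. Here is a minimal sketch, assuming a toy corpus of JSON-like text entities; the field names (`id`, `category`, `text`) and the helper `build_nested_hash` are hypothetical. Each word points to a dictionary of co-occurring words with their counts, so only nonzero associations are stored.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical corpus of JSON-like text entities (field names are illustrative).
corpus = [
    {"id": 1, "category": "databases", "text": "vector database stores embeddings"},
    {"id": 2, "category": "databases", "text": "graph database stores nodes and edges"},
    {"id": 3, "category": "llm", "text": "llm embeddings retrieval vector search"},
]


def build_nested_hash(entities):
    """Return {word: {co-occurring word: count}}, a hash of hashes.

    Only word pairs that actually co-occur in some entity are stored,
    so the structure stays sparse however large the vocabulary is.
    """
    nested = defaultdict(lambda: defaultdict(int))
    for entity in entities:
        words = sorted(set(entity["text"].split()))
        for a, b in combinations(words, 2):
            # Store both directions so lookups work from either keyword.
            nested[a][b] += 1
            nested[b][a] += 1
    # Convert to plain dicts so lookups of missing keys do not create entries.
    return {word: dict(links) for word, links in nested.items()}


index = build_nested_hash(corpus)
print(index["vector"]["embeddings"])    # 2: entities 1 and 3 contain both words
print(index["vector"].get("graph", 0))  # 0: never co-occur, so nothing is stored
```

Compared with a dense word-by-word matrix, memory grows with the number of observed pairs rather than with the square of the vocabulary size.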

Quick ways to improve efficiency

Here are seven strategies to achieve this goal.

- Switch to a different architecture with a better query engine, for instance from JSON or SQL to a vector database. The new engine may automatically optimize configuration parameters, which are essential to fine-tune database performance.

- Encode your fields efficiently, with minimal or no loss, especially for long text elements. This is done automatically when switching to a high-performance database. Also, instead of fixed-length embeddings (with a fixed number of tokens per embedding), use variable-length embeddings and nested hashes rather than vectors.

- Eliminate features or rows that are rarely used or redundant. Work with smaller vectors. Do you really need 1 trillion parameters, tokens, or weights? Much of it is noise. The keyword for this type of cleaning is “data distillation”. In one case, randomly eliminating 50% of the data resulted in better, more robust predictions.

- With sparse data such as keyword association tables, use hash structures. If you have 10 million keywords, there is no need for a 10 million x 10 million association table when 99.5% of keyword associations have zero weight or zero cosine similarity.

- Leverage the cloud, distributed architectures, and GPUs. Optimize queries to avoid expensive operations; this can be done automatically with AI, transparently to the user. Cache common queries and the rows or columns most frequently accessed. Use summary tables instead of raw tables. Archive raw data regularly.

- Load parts of the database in memory and perform in-memory queries. That’s how I get queries running at least 100 times faster in my LLM app, compared to vendors. The choice and type of index also matter. In my case, I use multi-indexes spanning multiple columns, rather than separate indexes. I call the method “smart encoding”; see illustration here.

- Use techniques such as approximate nearest neighbor search for faster retrieval, especially in RAG/LLM apps. For instance, in LLMs, you need to match an embedding coming from a prompt against your backend embeddings coming from the input corpus. In practice, there is often no exact match, hence the need for fuzzy matching. See example here.
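As an illustration of approximate nearest neighbor search, here is a minimal sketch of random-hyperplane locality-sensitive hashing (LSH) in pure Python. It is a generic, well-known technique, not necessarily the method used in the author's example; the class name `HyperplaneLSH` and the parameters `n_planes` and `n_tables` are hypothetical choices. Each vector is hashed to a bit signature (which side of each random hyperplane it falls on), and at query time only vectors sharing a bucket with the prompt embedding are re-ranked by exact cosine similarity, instead of scanning the whole corpus.

```python
import math
import random


def cosine(u, v):
    """Exact cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


class HyperplaneLSH:
    """Approximate nearest-neighbor index based on random-hyperplane LSH."""

    def __init__(self, dim, n_planes=8, n_tables=4, seed=0):
        rng = random.Random(seed)
        self.vectors = {}
        # Each table has its own random Gaussian hyperplanes and its own buckets.
        self.tables = []
        for _ in range(n_tables):
            planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
            self.tables.append((planes, {}))

    @staticmethod
    def _signature(planes, vec):
        # One bit per hyperplane: True if the vector lies on its positive side.
        return tuple(sum(p * x for p, x in zip(plane, vec)) >= 0 for plane in planes)

    def add(self, key, vec):
        self.vectors[key] = vec
        for planes, buckets in self.tables:
            buckets.setdefault(self._signature(planes, vec), []).append(key)

    def query(self, vec, k=3):
        # Gather candidates that share a bucket in at least one table...
        candidates = set()
        for planes, buckets in self.tables:
            candidates.update(buckets.get(self._signature(planes, vec), []))
        # ...then re-rank only those candidates by exact cosine similarity.
        ranked = sorted(candidates, key=lambda key: cosine(vec, self.vectors[key]), reverse=True)
        return ranked[:k]


# Usage: 500 random 32-dimensional "corpus embeddings".
rng = random.Random(1)
corpus = {i: [rng.gauss(0.0, 1.0) for _ in range(32)] for i in range(500)}
index = HyperplaneLSH(dim=32)
for key, vec in corpus.items():
    index.add(key, vec)

# A "prompt embedding" close to, but not identical to, corpus vector 42.
prompt = [x + rng.gauss(0.0, 0.05) for x in corpus[42]]
print(index.query(prompt, k=3))  # expected to contain 42 with high probability
```

More hyperplanes per table make buckets smaller (faster, but more misses); more tables recover those misses at the cost of memory. Because the match is approximate, a near-duplicate can occasionally be missed, which is the accuracy traded for speed.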

About the Author

Vincent Granville is a pioneering GenAI scientist and machine learning expert, co-founder of Data Science Central (acquired by a publicly traded company in 2020), Chief AI Scientist at MLTechniques.com and GenAItechLab.com, former VC-funded executive, author (Elsevier), and patent owner (one patent related to LLMs). Vincent’s past corporate experience includes Visa, Wells Fargo, eBay, NBC, Microsoft, and CNET. Follow Vincent on LinkedIn.